Merge branch 'develop' into pr/Blackhawke/3764

.github/workflows/ci.yml

@@ -88,7 +88,7 @@ jobs:
 run: |
 cp config.json.example config.json
 freqtrade create-userdir --userdir user_data
-freqtrade hyperopt --datadir tests/testdata -e 5 --strategy SampleStrategy --hyperopt SampleHyperOpt
+freqtrade hyperopt --datadir tests/testdata -e 5 --strategy SampleStrategy --hyperopt SampleHyperOpt --print-all
 
 - name: Flake8
 run: |

@@ -150,7 +150,7 @@ jobs:
 run: |
 cp config.json.example config.json
 freqtrade create-userdir --userdir user_data
-freqtrade hyperopt --datadir tests/testdata -e 5 --strategy SampleStrategy --hyperopt SampleHyperOpt
+freqtrade hyperopt --datadir tests/testdata -e 5 --strategy SampleStrategy --hyperopt SampleHyperOpt --print-all
 
 - name: Flake8
 run: |

@@ -1,7 +1,7 @@
 FROM --platform=linux/arm/v7 python:3.7.7-slim-buster
 
 RUN apt-get update \
-&& apt-get -y install curl build-essential libssl-dev libatlas3-base libgfortran5 sqlite3 \
+&& apt-get -y install curl build-essential libssl-dev libffi-dev libatlas3-base libgfortran5 sqlite3 \
 && apt-get clean \
 && pip install --upgrade pip \
 && echo "[global]\nextra-index-url=https://www.piwheels.org/simple" > /etc/pip.conf

@@ -123,7 +123,6 @@ Telegram is not mandatory. However, this is a great way to control your bot. Mor
 - `/help`: Show help message
 - `/version`: Show version
 
-
 ## Development branches
 
 The project is currently setup in two main branches:

@@ -7,7 +7,6 @@
 "timeframe": "5m",
 "dry_run": false,
 "cancel_open_orders_on_exit": false,
-"trailing_stop": false,
 "unfilledtimeout": {
 "buy": 10,
 "sell": 30

@@ -7,7 +7,6 @@
 "timeframe": "5m",
 "dry_run": true,
 "cancel_open_orders_on_exit": false,
-"trailing_stop": false,
 "unfilledtimeout": {
 "buy": 10,
 "sell": 30

@@ -7,7 +7,6 @@
 "timeframe": "5m",
 "dry_run": true,
 "cancel_open_orders_on_exit": false,
-"trailing_stop": false,
 "unfilledtimeout": {
 "buy": 10,
 "sell": 30

@@ -157,17 +157,32 @@ A backtesting result will look like that:
 | ADA/BTC | 1 | 0.89 | 0.89 | 0.00004434 | 0.44 | 6:00:00 | 1 | 0 | 0 |
 | LTC/BTC | 1 | 0.68 | 0.68 | 0.00003421 | 0.34 | 2:00:00 | 1 | 0 | 0 |
 | TOTAL | 2 | 0.78 | 1.57 | 0.00007855 | 0.78 | 4:00:00 | 2 | 0 | 0 |
+=============== SUMMARY METRICS ===============
+| Metric | Value |
+|-----------------------+---------------------|
+| Backtesting from | 2019-01-01 00:00:00 |
+| Backtesting to | 2019-05-01 00:00:00 |
+| Total trades | 429 |
+| First trade | 2019-01-01 18:30:00 |
+| First trade Pair | EOS/USDT |
+| Total Profit % | 152.41% |
+| Trades per day | 3.575 |
+| Best day | 25.27% |
+| Worst day | -30.67% |
+| Avg. Duration Winners | 4:23:00 |
+| Avg. Duration Loser | 6:55:00 |
+| | |
+| Max Drawdown | 50.63% |
+| Drawdown Start | 2019-02-15 14:10:00 |
+| Drawdown End | 2019-04-11 18:15:00 |
+| Market change | -5.88% |
+===============================================
 ```
 
+### Backtesting report table
+
 The 1st table contains all trades the bot made, including "left open trades".
 
-The 2nd table contains a recap of sell reasons.
-This table can tell you which area needs some additional work (i.e. all `sell_signal` trades are losses, so we should disable the sell-signal or work on improving that).
-
-The 3rd table contains all trades the bot had to `forcesell` at the end of the backtest period to present a full picture.
-This is necessary to simulate realistic behaviour, since the backtest period has to end at some point, while realistically, you could leave the bot running forever.
-These trades are also included in the first table, but are extracted separately for clarity.
-
 The last line will give you the overall performance of your strategy,
 here:
 

@@ -196,6 +211,58 @@ On the other hand, if you set a too high `minimal_roi` like `"0": 0.55`
 (55%), there is almost no chance that the bot will ever reach this profit.
 Hence, keep in mind that your performance is an integral mix of all different elements of the strategy, your configuration, and the crypto-currency pairs you have set up.
 
+### Sell reasons table
+
+The 2nd table contains a recap of sell reasons.
+This table can tell you which area needs some additional work (e.g. all or many of the `sell_signal` trades are losses, so you should work on improving the sell signal, or consider disabling it).
+
+### Left open trades table
+
+The 3rd table contains all trades the bot had to `forcesell` at the end of the backtesting period to present you the full picture.
+This is necessary to simulate realistic behavior, since the backtest period has to end at some point, while realistically, you could leave the bot running forever.
+These trades are also included in the first table, but are also shown separately in this table for clarity.
+
+### Summary metrics
+
+The last element of the backtest report is the summary metrics table.
+It contains some useful key metrics about performance of your strategy on backtesting data.
+
+```
+=============== SUMMARY METRICS ===============
+| Metric | Value |
+|-----------------------+---------------------|
+| Backtesting from | 2019-01-01 00:00:00 |
+| Backtesting to | 2019-05-01 00:00:00 |
+| Total trades | 429 |
+| First trade | 2019-01-01 18:30:00 |
+| First trade Pair | EOS/USDT |
+| Total Profit % | 152.41% |
+| Trades per day | 3.575 |
+| Best day | 25.27% |
+| Worst day | -30.67% |
+| Avg. Duration Winners | 4:23:00 |
+| Avg. Duration Loser | 6:55:00 |
+| | |
+| Max Drawdown | 50.63% |
+| Drawdown Start | 2019-02-15 14:10:00 |
+| Drawdown End | 2019-04-11 18:15:00 |
+| Market change | -5.88% |
+===============================================
+
+```
+
+- `Total trades`: Identical to the total trades of the backtest output table.
+- `First trade`: First trade entered.
+- `First trade pair`: Which pair was part of the first trade.
+- `Backtesting from` / `Backtesting to`: Backtesting range (usually defined with the `--timerange` option).
+- `Total Profit %`: Total profit per stake amount. Aligned to the TOTAL column of the first table.
+- `Trades per day`: Total trades divided by the backtesting duration in days (this will give you information about how many trades to expect from the strategy).
+- `Best day` / `Worst day`: Best and worst day based on daily profit.
+- `Avg. Duration Winners` / `Avg. Duration Loser`: Average durations for winning and losing trades.
+- `Max Drawdown`: Maximum drawdown experienced. For example, the value of 50% means that from highest to subsequent lowest point, a 50% drop was experienced).
+- `Drawdown Start` / `Drawdown End`: Start and end datetimes for this largest drawdown (can also be visualized via the `plot-dataframe` sub-command).
+- `Market change`: Change of the market during the backtest period. Calculated as average of all pairs changes from the first to the last candle using the "close" column.
+
 ### Assumptions made by backtesting
 
 Since backtesting lacks some detailed information about what happens within a candle, it needs to take a few assumptions:
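
The summary metrics introduced in the hunk above can be approximated in a few lines of Python. This is a minimal sketch, not freqtrade's implementation. The function names are hypothetical. `max_drawdown` assumes a non-empty cumulative-profit series expressed as ratios and measures the peak-to-trough drop as an absolute difference. `market_change` assumes one list of close prices per pair. With the example report (429 trades between 2019-01-01 and 2019-05-01, i.e. 120 days), `trades_per_day` reproduces the reported 3.575.

```python
from datetime import datetime
from statistics import mean
from typing import Dict, List


def trades_per_day(total_trades: int, start: datetime, end: datetime) -> float:
    """Total trades divided by the backtesting duration in days (hypothetical helper)."""
    days = (end - start).total_seconds() / 86400
    return total_trades / days


def max_drawdown(cumulative_profit: List[float]) -> float:
    """Largest drop from a running peak to a later low (assumes a non-empty series)."""
    peak = cumulative_profit[0]
    worst = 0.0
    for value in cumulative_profit:
        peak = max(peak, value)            # highest point seen so far
        worst = max(worst, peak - value)   # drop from that peak to the current point
    return worst


def market_change(closes_per_pair: Dict[str, List[float]]) -> float:
    """Average of each pair's relative change from the first to the last close."""
    return mean(closes[-1] / closes[0] - 1 for closes in closes_per_pair.values())


if __name__ == "__main__":
    print(trades_per_day(429, datetime(2019, 1, 1), datetime(2019, 5, 1)))
    print(max_drawdown([0.0, 0.5, 0.25, 1.0, -0.5, 0.0]))
    print(market_change({"EOS/USDT": [1.0, 2.0], "ADA/BTC": [4.0, 3.0]}))
```

A drawdown measured relative to the peak (a percentage drop) would divide by `peak` instead. Which convention freqtrade uses is not stated in this diff.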

@@ -5,6 +5,9 @@ This page explains the different parameters of the bot and how to run it.
 !!! Note
 If you've used `setup.sh`, don't forget to activate your virtual environment (`source .env/bin/activate`) before running freqtrade commands.
 
+!!! Warning "Up-to-date clock"
+The clock on the system running the bot must be accurate, synchronized to a NTP server frequently enough to avoid problems with communication to the exchanges.
+
 ## Bot commands
 
 ```

@@ -55,9 +55,9 @@ Mandatory parameters are marked as **Required**, which means that they are requi
 | `process_only_new_candles` | Enable processing of indicators only when new candles arrive. If false each loop populates the indicators, this will mean the same candle is processed many times creating system load but can be useful of your strategy depends on tick data not only candle. [Strategy Override](#parameters-in-the-strategy). <br>*Defaults to `false`.* <br> **Datatype:** Boolean
 | `minimal_roi` | **Required.** Set the threshold as ratio the bot will use to sell a trade. [More information below](#understand-minimal_roi). [Strategy Override](#parameters-in-the-strategy). <br> **Datatype:** Dict
 | `stoploss` | **Required.** Value as ratio of the stoploss used by the bot. More details in the [stoploss documentation](stoploss.md). [Strategy Override](#parameters-in-the-strategy). <br> **Datatype:** Float (as ratio)
-| `trailing_stop` | Enables trailing stoploss (based on `stoploss` in either configuration or strategy file). More details in the [stoploss documentation](stoploss.md). [Strategy Override](#parameters-in-the-strategy). <br> **Datatype:** Boolean
-| `trailing_stop_positive` | Changes stoploss once profit has been reached. More details in the [stoploss documentation](stoploss.md). [Strategy Override](#parameters-in-the-strategy). <br> **Datatype:** Float
-| `trailing_stop_positive_offset` | Offset on when to apply `trailing_stop_positive`. Percentage value which should be positive. More details in the [stoploss documentation](stoploss.md). [Strategy Override](#parameters-in-the-strategy). <br>*Defaults to `0.0` (no offset).* <br> **Datatype:** Float
+| `trailing_stop` | Enables trailing stoploss (based on `stoploss` in either configuration or strategy file). More details in the [stoploss documentation](stoploss.md#trailing-stop-loss). [Strategy Override](#parameters-in-the-strategy). <br> **Datatype:** Boolean
+| `trailing_stop_positive` | Changes stoploss once profit has been reached. More details in the [stoploss documentation](stoploss.md#trailing-stop-loss-custom-positive-loss). [Strategy Override](#parameters-in-the-strategy). <br> **Datatype:** Float
+| `trailing_stop_positive_offset` | Offset on when to apply `trailing_stop_positive`. Percentage value which should be positive. More details in the [stoploss documentation](stoploss.md#trailing-stop-loss-only-once-the-trade-has-reached-a-certain-offset). [Strategy Override](#parameters-in-the-strategy). <br>*Defaults to `0.0` (no offset).* <br> **Datatype:** Float
 | `trailing_only_offset_is_reached` | Only apply trailing stoploss when the offset is reached. [stoploss documentation](stoploss.md). [Strategy Override](#parameters-in-the-strategy). <br>*Defaults to `false`.* <br> **Datatype:** Boolean
 | `unfilledtimeout.buy` | **Required.** How long (in minutes) the bot will wait for an unfilled buy order to complete, after which the order will be cancelled. [Strategy Override](#parameters-in-the-strategy).<br> **Datatype:** Integer
 | `unfilledtimeout.sell` | **Required.** How long (in minutes) the bot will wait for an unfilled sell order to complete, after which the order will be cancelled. [Strategy Override](#parameters-in-the-strategy).<br> **Datatype:** Integer

@@ -278,24 +278,13 @@ This allows to buy using limit orders, sell using
 limit-orders, and create stoplosses using market orders. It also allows to set the
 stoploss "on exchange" which means stoploss order would be placed immediately once
 the buy order is fulfilled.
-If `stoploss_on_exchange` and `trailing_stop` are both set, then the bot will use `stoploss_on_exchange_interval` to check and update the stoploss on exchange periodically.
-`order_types` can be set in the configuration file or in the strategy.
+
 `order_types` set in the configuration file overwrites values set in the strategy as a whole, so you need to configure the whole `order_types` dictionary in one place.
 
 If this is configured, the following 4 values (`buy`, `sell`, `stoploss` and
 `stoploss_on_exchange`) need to be present, otherwise the bot will fail to start.
 
-`emergencysell` is an optional value, which defaults to `market` and is used when creating stoploss on exchange orders fails.
-The below is the default which is used if this is not configured in either strategy or configuration file.
+For information on (`emergencysell`,`stoploss_on_exchange`,`stoploss_on_exchange_interval`,`stoploss_on_exchange_limit_ratio`) please see stop loss documentation [stop loss on exchange](stoploss.md)
 
-Not all Exchanges support `stoploss_on_exchange`. If an exchange supports both limit and market stoploss orders, then the value of `stoploss` will be used to determine the stoploss type.
-
-If `stoploss_on_exchange` uses limit orders, the exchange needs 2 prices, the stoploss_price and the Limit price.
-`stoploss` defines the stop-price - and limit should be slightly below this.
-
-This defaults to 0.99 / 1% (configurable via `stoploss_on_exchange_limit_ratio`).
-Calculation example: we bought the asset at 100$.
-Stop-price is 95$, then limit would be `95 * 0.99 = 94.05$` - so the stoploss will happen between 95$ and 94.05$.
-
 Syntax for Strategy:
 
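
The limit-price calculation example removed in the hunk above (buy at 100$, stop-price 95$, limit `95 * 0.99 = 94.05$`) can be reproduced with a small sketch. It assumes two things. First, the stop-price is derived from the open rate and a `stoploss` of -0.05. Second, the limit price is the stop-price multiplied by `stoploss_on_exchange_limit_ratio`, which defaults to 0.99 per the removed text. The function name is hypothetical, and this is not freqtrade's code.

```python
from typing import Tuple


def stoploss_prices(open_rate: float, stoploss: float,
                    limit_ratio: float = 0.99) -> Tuple[float, float]:
    """Return (stop_price, limit_price) for a limit stoploss on exchange (hypothetical helper)."""
    stop_price = open_rate * (1 + stoploss)   # stoploss is a negative ratio, e.g. -0.05
    limit_price = stop_price * limit_ratio    # limit sits slightly below the stop-price
    return stop_price, limit_price


if __name__ == "__main__":
    stop, limit = stoploss_prices(100, -0.05)
    print(f"stop={stop:.2f} limit={limit:.2f}")
```

The fill therefore lands somewhere between the stop-price and the limit price, which is the 95$ to 94.05$ window the removed paragraph describes.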

@@ -663,24 +652,28 @@ Filters low-value coins which would not allow setting stoplosses.
 #### PriceFilter
 
 The `PriceFilter` allows filtering of pairs by price. Currently the following price filters are supported:
+
 * `min_price`
 * `max_price`
 * `low_price_ratio`
 
 The `min_price` setting removes pairs where the price is below the specified price. This is useful if you wish to avoid trading very low-priced pairs.
-This option is disabled by default, and will only apply if set to <> 0.
+This option is disabled by default, and will only apply if set to > 0.
 
 The `max_price` setting removes pairs where the price is above the specified price. This is useful if you wish to trade only low-priced pairs.
-This option is disabled by default, and will only apply if set to <> 0.
+This option is disabled by default, and will only apply if set to > 0.
 
 The `low_price_ratio` setting removes pairs where a raise of 1 price unit (pip) is above the `low_price_ratio` ratio.
-This option is disabled by default, and will only apply if set to <> 0.
+This option is disabled by default, and will only apply if set to > 0.
 
+For `PriceFiler` at least one of its `min_price`, `max_price` or `low_price_ratio` settings must be applied.
+
 Calculation example:
 
-Min price precision is 8 decimals. If price is 0.00000011 - one step would be 0.00000012 - which is almost 10% higher than the previous value.
+Min price precision for SHITCOIN/BTC is 8 decimals. If its price is 0.00000011 - one price step above would be 0.00000012, which is ~9% higher than the previous price value. You may filter out this pair by using PriceFilter with `low_price_ratio` set to 0.09 (9%) or with `min_price` set to 0.00000011, correspondingly.
 
-These pairs are dangerous since it may be impossible to place the desired stoploss - and often result in high losses.
+!!! Warning "Low priced pairs"
+Low priced pairs with high "1 pip movements" are dangerous since they are often illiquid and it may also be impossible to place the desired stoploss, which can often result in high losses since price needs to be rounded to the next tradable price - so instead of having a stoploss of -5%, you could end up with a stoploss of -9% simply due to price rounding.
 
 #### ShuffleFilter
 
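
The three `PriceFilter` settings described in the hunk above can be expressed as a single predicate. This is a minimal sketch under stated assumptions:

- The function name and signature are hypothetical.
- The price step (tick size) is passed in explicitly rather than read from exchange market data.
- `Decimal` is used to avoid float rounding at 8-decimal precision.

Each setting applies only when it is > 0. The one-step rise ratio is checked the way the `low_price_ratio` description states.

```python
from decimal import Decimal

ZERO = Decimal(0)


def passes_price_filter(price: Decimal, tick_size: Decimal,
                        min_price: Decimal = ZERO,
                        max_price: Decimal = ZERO,
                        low_price_ratio: Decimal = ZERO) -> bool:
    """Return False if the pair would be removed (hypothetical helper)."""
    # Relative rise caused by moving the price up by one step (1 pip).
    if low_price_ratio > 0 and tick_size / price > low_price_ratio:
        return False
    if min_price > 0 and price < min_price:
        return False
    if max_price > 0 and price > max_price:
        return False
    return True


if __name__ == "__main__":
    # SHITCOIN/BTC from the calculation example: 0.00000011 -> 0.00000012 is a ~9.1% step.
    print(passes_price_filter(Decimal("0.00000011"), Decimal("0.00000001"),
                              low_price_ratio=Decimal("0.09")))
```

With the strict `price < min_price` comparison used here, a `min_price` exactly equal to the pair's price does not remove it. Whether freqtrade compares strictly is not shown in this diff.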

@@ -15,61 +15,91 @@ Otherwise `--exchange` becomes mandatory.
 ### Usage
 
 ```
-usage: freqtrade download-data [-h] [-v] [--logfile FILE] [-V] [-c PATH] [-d PATH] [--userdir PATH] [-p PAIRS [PAIRS ...]]
-[--pairs-file FILE] [--days INT] [--dl-trades] [--exchange EXCHANGE]
+usage: freqtrade download-data [-h] [-v] [--logfile FILE] [-V] [-c PATH]
+[-d PATH] [--userdir PATH]
+[-p PAIRS [PAIRS ...]] [--pairs-file FILE]
+[--days INT] [--dl-trades]
+[--exchange EXCHANGE]
 [-t {1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} [{1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} ...]]
-[--erase] [--data-format-ohlcv {json,jsongz}] [--data-format-trades {json,jsongz}]
+[--erase]
+[--data-format-ohlcv {json,jsongz,hdf5}]
+[--data-format-trades {json,jsongz,hdf5}]
 
 optional arguments:
 -h, --help show this help message and exit
 -p PAIRS [PAIRS ...], --pairs PAIRS [PAIRS ...]
-Show profits for only these pairs. Pairs are space-separated.
+Show profits for only these pairs. Pairs are space-
+separated.
 --pairs-file FILE File containing a list of pairs to download.
 --days INT Download data for given number of days.
---dl-trades Download trades instead of OHLCV data. The bot will resample trades to the desired timeframe as specified as
---timeframes/-t.
---exchange EXCHANGE Exchange name (default: `bittrex`). Only valid if no config is provided.
+--dl-trades Download trades instead of OHLCV data. The bot will
+resample trades to the desired timeframe as specified
+as --timeframes/-t.
+--exchange EXCHANGE Exchange name (default: `bittrex`). Only valid if no
+config is provided.
 -t {1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} [{1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} ...], --timeframes {1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} [{1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} ...]
-Specify which tickers to download. Space-separated list. Default: `1m 5m`.
---erase Clean all existing data for the selected exchange/pairs/timeframes.
---data-format-ohlcv {json,jsongz}
-Storage format for downloaded candle (OHLCV) data. (default: `json`).
---data-format-trades {json,jsongz}
-Storage format for downloaded trades data. (default: `jsongz`).
+Specify which tickers to download. Space-separated
+list. Default: `1m 5m`.
+--erase Clean all existing data for the selected
+exchange/pairs/timeframes.
+--data-format-ohlcv {json,jsongz,hdf5}
+Storage format for downloaded candle (OHLCV) data.
+(default: `json`).
+--data-format-trades {json,jsongz,hdf5}
+Storage format for downloaded trades data. (default:
+`jsongz`).
 
 Common arguments:
 -v, --verbose Verbose mode (-vv for more, -vvv to get all messages).
---logfile FILE Log to the file specified. Special values are: 'syslog', 'journald'. See the documentation for more details.
+--logfile FILE Log to the file specified. Special values are:
+'syslog', 'journald'. See the documentation for more
+details.
 -V, --version show program's version number and exit
 -c PATH, --config PATH
-Specify configuration file (default: `config.json`). Multiple --config options may be used. Can be set to `-`
-to read config from stdin.
+Specify configuration file (default:
+`userdir/config.json` or `config.json` whichever
+exists). Multiple --config options may be used. Can be
+set to `-` to read config from stdin.
 -d PATH, --datadir PATH
 Path to directory with historical backtesting data.
 --userdir PATH, --user-data-dir PATH
 Path to userdata directory.
+
 ```
 
 ### Data format
 
-Freqtrade currently supports 2 dataformats, `json` (plain "text" json files) and `jsongz` (a gzipped version of json files).
+Freqtrade currently supports 3 data-formats for both OHLCV and trades data:
+
+* `json` (plain "text" json files)
+* `jsongz` (a gzip-zipped version of json files)
+* `hdf5` (a high performance datastore)
+
 By default, OHLCV data is stored as `json` data, while trades data is stored as `jsongz` data.
 
-This can be changed via the `--data-format-ohlcv` and `--data-format-trades` parameters respectivly.
+This can be changed via the `--data-format-ohlcv` and `--data-format-trades` command line arguments respectively.
+To persist this change, you can should also add the following snippet to your configuration, so you don't have to insert the above arguments each time:
 
-If the default dataformat has been changed during download, then the keys `dataformat_ohlcv` and `dataformat_trades` in the configuration file need to be adjusted to the selected dataformat as well.
+``` jsonc
+// ...
+"dataformat_ohlcv": "hdf5",
+"dataformat_trades": "hdf5",
+// ...
+```
+
+If the default data-format has been changed during download, then the keys `dataformat_ohlcv` and `dataformat_trades` in the configuration file need to be adjusted to the selected dataformat as well.
 
 !!! Note
-You can convert between data-formats using the [convert-data](#subcommand-convert-data) and [convert-trade-data](#subcommand-convert-trade-data) methods.
+You can convert between data-formats using the [convert-data](#sub-command-convert-data) and [convert-trade-data](#sub-command-convert-trade-data) methods.
 
-#### Subcommand convert data
+#### Sub-command convert data
 
 ```
 usage: freqtrade convert-data [-h] [-v] [--logfile FILE] [-V] [-c PATH]
 [-d PATH] [--userdir PATH]
 [-p PAIRS [PAIRS ...]] --format-from
-{json,jsongz} --format-to {json,jsongz}
-[--erase]
+{json,jsongz,hdf5} --format-to
+{json,jsongz,hdf5} [--erase]
 [-t {1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} [{1m,3m,5m,15m,30m,1h,2h,4h,6h,8h,12h,1d,3d,1w} ...]]
 
 optional arguments:

@@ -77,9 +107,9 @@ optional arguments:
 -p PAIRS [PAIRS ...], --pairs PAIRS [PAIRS ...]
 Show profits for only these pairs. Pairs are space-
 separated.
---format-from {json,jsongz}
+--format-from {json,jsongz,hdf5}
 Source format for data conversion.
---format-to {json,jsongz}
+--format-to {json,jsongz,hdf5}
 Destination format for data conversion.
 --erase Clean all existing data for the selected
 exchange/pairs/timeframes.

@@ -94,9 +124,10 @@ Common arguments:
                         details.
   -V, --version         show program's version number and exit
   -c PATH, --config PATH
-                        Specify configuration file (default: `config.json`).
-                        Multiple --config options may be used. Can be set to
-                        `-` to read config from stdin.
+                        Specify configuration file (default:
+                        `userdir/config.json` or `config.json` whichever
+                        exists). Multiple --config options may be used. Can be
+                        set to `-` to read config from stdin.
   -d PATH, --datadir PATH
                         Path to directory with historical backtesting data.
   --userdir PATH, --user-data-dir PATH
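The revised `--config` help text says the default is `userdir/config.json` or `config.json`, whichever exists. A minimal sketch of that lookup, assuming `userdir` is the already-resolved user-data directory and that it is checked before the working directory (the help text does not state an order). `resolve_default_config` is a hypothetical name, not a freqtrade function.

```python
import tempfile
from pathlib import Path
from typing import Optional


def resolve_default_config(userdir: Path, cwd: Path) -> Optional[Path]:
    """Return the first existing default config file, or None if neither exists.

    Hypothetical helper: checking the user-data directory before the working
    directory is an assumption; the help text only says "whichever exists".
    """
    for candidate in (userdir / "config.json", cwd / "config.json"):
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        userdir = root / "user_data"
        userdir.mkdir()
        (root / "config.json").write_text("{}")
        # Only the working-directory file exists, so that one is picked.
        print(resolve_default_config(userdir, root))
```

Passing `--config` explicitly bypasses any such default, which is why the lookup only matters when no `-c` option is given.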

@@ -112,23 +143,23 @@ It'll also remove original json data files (`--erase` parameter).
 freqtrade convert-data --format-from json --format-to jsongz --datadir ~/.freqtrade/data/binance -t 5m 15m --erase
 ```

-#### Subcommand convert-trade data
+#### Sub-command convert trade data

 ```
 usage: freqtrade convert-trade-data [-h] [-v] [--logfile FILE] [-V] [-c PATH]
                                     [-d PATH] [--userdir PATH]
                                     [-p PAIRS [PAIRS ...]] --format-from
-                                    {json,jsongz} --format-to {json,jsongz}
-                                    [--erase]
+                                    {json,jsongz,hdf5} --format-to
+                                    {json,jsongz,hdf5} [--erase]

 optional arguments:
   -h, --help            show this help message and exit
   -p PAIRS [PAIRS ...], --pairs PAIRS [PAIRS ...]
                         Show profits for only these pairs. Pairs are space-
                         separated.
-  --format-from {json,jsongz}
+  --format-from {json,jsongz,hdf5}
                         Source format for data conversion.
-  --format-to {json,jsongz}
+  --format-to {json,jsongz,hdf5}
                         Destination format for data conversion.
   --erase               Clean all existing data for the selected
                         exchange/pairs/timeframes.
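The `convert-data` example turns `json` files into `jsongz` files and, with `--erase`, removes the originals. A stdlib-only sketch of what that amounts to for a single file, assuming `jsongz` is the same JSON payload stored gzip-compressed under a `.json.gz` suffix and assuming a file name such as `ETH_BTC-5m.json`. `convert_json_to_jsongz` is a hypothetical helper, not freqtrade's data-handler code, and it does not cover `hdf5`.

```python
import gzip
import json
from pathlib import Path


def convert_json_to_jsongz(src: Path, erase: bool = False) -> Path:
    """Rewrite one .json data file as gzip-compressed JSON next to it.

    Hypothetical helper: assumes "jsongz" is the same JSON payload stored
    gzip-compressed with a .json.gz suffix.
    """
    if src.suffix != ".json":
        raise ValueError(f"expected a .json file, got {src.name}")
    data = json.loads(src.read_text(encoding="utf-8"))
    dst = src.with_name(src.name + ".gz")  # ETH_BTC-5m.json -> ETH_BTC-5m.json.gz
    with gzip.open(dst, "wt", encoding="utf-8") as fh:
        json.dump(data, fh)
    if erase:
        # Same intent as --erase: remove the source once the copy is written.
        src.unlink()
    return dst
```

Looping this over the selected pairs and timeframes approximates the example command; the real sub-command also validates the data and supports the other formats listed in its help output.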

@@ -140,13 +171,15 @@ Common arguments:
                         details.
   -V, --version         show program's version number and exit
   -c PATH, --config PATH
-                        Specify configuration file (default: `config.json`).
-                        Multiple --config options may be used. Can be set to
-                        `-` to read config from stdin.
+                        Specify configuration file (default:
+                        `userdir/config.json` or `config.json` whichever
+                        exists). Multiple --config options may be used. Can be
+                        set to `-` to read config from stdin.
   -d PATH, --datadir PATH
                         Path to directory with historical backtesting data.
   --userdir PATH, --user-data-dir PATH
                         Path to userdata directory.

 ```

 ##### Example converting trades

@@ -158,21 +191,21 @@ It'll also remove original jsongz data files (`--erase` parameter).
 freqtrade convert-trade-data --format-from jsongz --format-to json --datadir ~/.freqtrade/data/kraken --erase
 ```

-### Subcommand list-data
+### Sub-command list-data

-You can get a list of downloaded data using the `list-data` subcommand.
+You can get a list of downloaded data using the `list-data` sub-command.

 ```
 usage: freqtrade list-data [-h] [-v] [--logfile FILE] [-V] [-c PATH] [-d PATH]
                            [--userdir PATH] [--exchange EXCHANGE]
-                           [--data-format-ohlcv {json,jsongz}]
+                           [--data-format-ohlcv {json,jsongz,hdf5}]
                            [-p PAIRS [PAIRS ...]]

 optional arguments:
   -h, --help            show this help message and exit
   --exchange EXCHANGE   Exchange name (default: `bittrex`). Only valid if no
                         config is provided.
-  --data-format-ohlcv {json,jsongz}
+  --data-format-ohlcv {json,jsongz,hdf5}
                         Storage format for downloaded candle (OHLCV) data.
                         (default: `json`).
   -p PAIRS [PAIRS ...], --pairs PAIRS [PAIRS ...]
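`list-data` reports which candle data is present in the data directory. A rough sketch of such a listing, assuming candle files are named `<BASE>_<QUOTE>-<timeframe>.json` or `.json.gz` (following the `/` to `_` substitution seen in `ETH_BTC-trades.json.gz`). The naming pattern and `list_candle_data` are assumptions for illustration; the real command reads through freqtrade's data handlers and also knows `hdf5`.

```python
import re
from collections import defaultdict
from pathlib import Path
from typing import Dict, List, Set

# Assumed file naming: <BASE>_<QUOTE>-<timeframe>.json or .json.gz
_CANDLE_FILE = re.compile(
    r"^(?P<pair>[A-Za-z0-9]+_[A-Za-z0-9]+)-(?P<timeframe>\d+[mhdwM])\.json(?:\.gz)?$"
)


def list_candle_data(datadir: Path) -> Dict[str, List[str]]:
    """Map each pair (BASE/QUOTE) to the timeframes found in datadir."""
    found: Dict[str, Set[str]] = defaultdict(set)
    for path in datadir.iterdir():
        match = _CANDLE_FILE.match(path.name)
        if match is None:
            continue  # e.g. trades files such as ETH_BTC-trades.json.gz
        pair = match.group("pair").replace("_", "/")
        found[pair].add(match.group("timeframe"))
    # Timeframes are sorted as plain strings, so "15m" sorts before "5m".
    return {pair: sorted(timeframes) for pair, timeframes in sorted(found.items())}
```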

@@ -194,6 +227,7 @@ Common arguments:
                         Path to directory with historical backtesting data.
   --userdir PATH, --user-data-dir PATH
                         Path to userdata directory.

 ```

 #### Example list-data

@@ -249,7 +283,7 @@ This will download historical candle (OHLCV) data for all the currency pairs you
 ### Other Notes

 - To use a different directory than the exchange specific default, use `--datadir user_data/data/some_directory`.
-- To change the exchange used to download the historical data from, please use a different configuration file (you'll probably need to adjust ratelimits etc.)
+- To change the exchange used to download the historical data from, please use a different configuration file (you'll probably need to adjust rate limits etc.)
 - To use `pairs.json` from some other directory, use `--pairs-file some_other_dir/pairs.json`.
 - To download historical candle (OHLCV) data for only 10 days, use `--days 10` (defaults to 30 days).
 - Use `--timeframes` to specify what timeframe download the historical candle (OHLCV) data for. Default is `--timeframes 1m 5m` which will download 1-minute and 5-minute data.

@@ -257,7 +291,7 @@ This will download historical candle (OHLCV) data for all the currency pairs you

 ### Trades (tick) data

-By default, `download-data` subcommand downloads Candles (OHLCV) data. Some exchanges also provide historic trade-data via their API.
+By default, `download-data` sub-command downloads Candles (OHLCV) data. Some exchanges also provide historic trade-data via their API.
 This data can be useful if you need many different timeframes, since it is only downloaded once, and then resampled locally to the desired timeframes.

 Since this data is large by default, the files use gzip by default. They are stored in your data-directory with the naming convention of `<pair>-trades.json.gz` (`ETH_BTC-trades.json.gz`). Incremental mode is also supported, as for historic OHLCV data, so downloading the data once per week with `--days 8` will create an incremental data-repository.
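The trades paragraph documents the `<pair>-trades.json.gz` naming and incremental mode. Downloading weekly with `--days 8` requests 8 days every 7 days, so consecutive runs overlap by about one day, and an incremental store has to merge without duplicating that overlap. A sketch of both pieces, assuming each trade is a dict with a unique `"id"` and a millisecond `"timestamp"`; these field names and both helpers are assumptions, not freqtrade's storage code.

```python
from typing import Dict, Iterable, List


def trades_filename(pair: str) -> str:
    """Build the documented '<pair>-trades.json.gz' name ('/' becomes '_')."""
    return f"{pair.replace('/', '_')}-trades.json.gz"


def merge_trades(existing: Iterable[Dict], new: Iterable[Dict]) -> List[Dict]:
    """Merge two trade lists, keeping one entry per trade id, ordered by timestamp.

    Assumes every trade dict has a unique "id" and a "timestamp" in milliseconds.
    """
    by_id: Dict[str, Dict] = {}
    for trade in [*existing, *new]:
        by_id[str(trade["id"])] = trade  # a re-downloaded trade overwrites its old copy
    return sorted(by_id.values(), key=lambda trade: trade["timestamp"])
```

Keying on the trade id rather than the timestamp matters because several trades can share the same millisecond.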

@@ -9,21 +9,20 @@ and are no longer supported. Please avoid their usage in your configuration.
 ### the `--refresh-pairs-cached` command line option

 `--refresh-pairs-cached` in the context of backtesting, hyperopt and edge allows to refresh candle data for backtesting.
-Since this leads to much confusion, and slows down backtesting (while not being part of backtesting) this has been singled out
-as a seperate freqtrade subcommand `freqtrade download-data`.
+Since this leads to much confusion, and slows down backtesting (while not being part of backtesting) this has been singled out as a separate freqtrade sub-command `freqtrade download-data`.

-This command line option was deprecated in 2019.7-dev (develop branch) and removed in 2019.9 (master branch).
+This command line option was deprecated in 2019.7-dev (develop branch) and removed in 2019.9.

 ### The **--dynamic-whitelist** command line option

 This command line option was deprecated in 2018 and removed freqtrade 2019.6-dev (develop branch)
-and in freqtrade 2019.7 (master branch).
+and in freqtrade 2019.7.

 ### the `--live` command line option

 `--live` in the context of backtesting allowed to download the latest tick data for backtesting.
 Did only download the latest 500 candles, so was ineffective in getting good backtest data.
-Removed in 2019-7-dev (develop branch) and in freqtrade 2019-8 (master branch)
+Removed in 2019-7-dev (develop branch) and in freqtrade 2019.8.

 ### Allow running multiple pairlists in sequence

@@ -31,6 +30,6 @@ The former `"pairlist"` section in the configuration has been removed, and is re

 The old section of configuration parameters (`"pairlist"`) has been deprecated in 2019.11 and has been removed in 2020.4.

-### deprecation of bidVolume and askVolume from volumepairlist
+### deprecation of bidVolume and askVolume from volume-pairlist

-Since only quoteVolume can be compared between assets, the other options (bidVolume, askVolume) have been deprecated in 2020.4.
+Since only quoteVolume can be compared between assets, the other options (bidVolume, askVolume) have been deprecated in 2020.4, and have been removed in 2020.9.

@@ -10,6 +10,15 @@ Documentation is available at [https://freqtrade.io](https://www.freqtrade.io/)

 Special fields for the documentation (like Note boxes, ...) can be found [here](https://squidfunk.github.io/mkdocs-material/extensions/admonition/).

+To test the documentation locally use the following commands.
+
+``` bash
+pip install -r docs/requirements-docs.txt
+mkdocs serve
+```
+
+This will spin up a local server (usually on port 8000) so you can see if everything looks as you'd like it to.
+
 ## Developer setup

 To configure a development environment, best use the `setup.sh` script and answer "y" when asked "Do you want to install dependencies for dev [y/N]? ".

@@ -52,6 +61,7 @@ The fastest and easiest way to start up is to use docker-compose.develop which g
 * [docker-compose](https://docs.docker.com/compose/install/)

 #### Starting the bot

 ##### Use the develop dockerfile

 ``` bash

@@ -74,7 +84,7 @@ docker-compose up
 docker-compose build
 ```

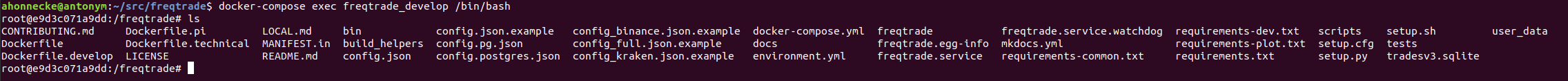

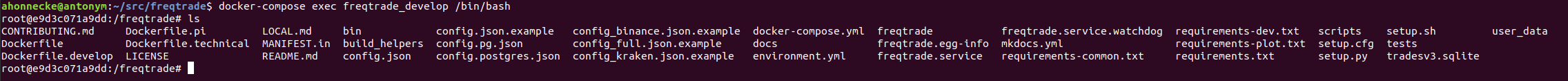

-##### Execing (effectively SSH into the container)
+##### Executing (effectively SSH into the container)

 The `exec` command requires that the container already be running, if you want to start it
 that can be effected by `docker-compose up` or `docker-compose run freqtrade_develop`

@@ -85,6 +95,35 @@ docker-compose exec freqtrade_develop /bin/bash



+## ErrorHandling
+
+Freqtrade Exceptions all inherit from `FreqtradeException`.
+This general class of error should however not be used directly. Instead, multiple specialized sub-Exceptions exist.
+
+Below is an outline of exception inheritance hierarchy:
+
+```
++ FreqtradeException
+|
++---+ OperationalException
+|
++---+ DependencyException
+|   |
+|   +---+ PricingError
+|   |
+|   +---+ ExchangeError
+|       |
+|       +---+ TemporaryError
+|       |
+|       +---+ DDosProtection
+|       |
+|       +---+ InvalidOrderException
+|           |
+|           +---+ RetryableOrderError
+|
++---+ StrategyError
+```
+
 ## Modules

 ### Dynamic Pairlist
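The ErrorHandling outline can be mirrored with plain Python classes to show why the hierarchy matters in practice: callers catch the narrowest class they can act on, for instance retrying a transient exchange error while letting an `OperationalException` surface. This is a standalone sketch that reproduces the tree as drawn; it does not import freqtrade, and `call_with_retries` (including which errors it treats as retryable) is a hypothetical helper.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


# Class tree as drawn in the ErrorHandling outline; bodies are intentionally empty.
class FreqtradeException(Exception):
    """Common base class; the docs say not to raise it directly."""


class OperationalException(FreqtradeException):
    pass


class DependencyException(FreqtradeException):
    pass


class PricingError(DependencyException):
    pass


class ExchangeError(DependencyException):
    pass


class TemporaryError(ExchangeError):
    pass


class DDosProtection(ExchangeError):
    pass


class InvalidOrderException(ExchangeError):
    pass


class RetryableOrderError(InvalidOrderException):
    pass


class StrategyError(FreqtradeException):
    pass


def call_with_retries(func: Callable[[], T], retries: int = 3) -> T:
    """Call func, retrying only on errors assumed to be transient.

    Hypothetical helper: the choice of retryable classes is an assumption, and
    a real implementation would also back off between attempts.
    """
    attempt = 0
    while True:
        try:
            return func()
        except (TemporaryError, DDosProtection):
            attempt += 1
            if attempt > retries:
                raise
```

Any other exception, such as `OperationalException`, propagates on the first attempt, which keeps configuration errors from being hidden behind retries.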

@@ -98,7 +137,7 @@ First of all, have a look at the [VolumePairList](https://github.com/freqtrade/f

 This is a simple Handler, which however serves as a good example on how to start developing.

-Next, modify the classname of the Handler (ideally align this with the module filename).
+Next, modify the class-name of the Handler (ideally align this with the module filename).

 The base-class provides an instance of the exchange (`self._exchange`) the pairlist manager (`self._pairlistmanager`), as well as the main configuration (`self._config`), the pairlist dedicated configuration (`self._pairlistconfig`) and the absolute position within the list of pairlists.

@@ -118,7 +157,7 @@ Configuration for the chain of Pairlist Handlers is done in the bot configuratio

 By convention, `"number_assets"` is used to specify the maximum number of pairs to keep in the pairlist. Please follow this to ensure a consistent user experience.

-Additional parameters can be configured as needed. For instance, `VolumePairList` uses `"sort_key"` to specify the sorting value - however feel free to specify whatever is necessary for your great algorithm to be successfull and dynamic.
+Additional parameters can be configured as needed. For instance, `VolumePairList` uses `"sort_key"` to specify the sorting value - however feel free to specify whatever is necessary for your great algorithm to be successful and dynamic.

 #### short_desc

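By convention a Pairlist Handler reads `"number_assets"` for its size limit, and `VolumePairList` additionally reads `"sort_key"`. A sketch of one handler entry and of how those two keys could be applied to ticker data, assuming tickers are a dict keyed by pair whose values carry a `"quoteVolume"` field. `apply_volume_config` is a hypothetical function, not the `VolumePairList` implementation.

```python
from typing import Any, Dict, List

# One entry of a "pairlists" chain; the values are illustrative.
pairlist_config: Dict[str, Any] = {
    "method": "VolumePairList",
    "number_assets": 2,
    "sort_key": "quoteVolume",
}


def apply_volume_config(tickers: Dict[str, Dict[str, float]],
                        config: Dict[str, Any]) -> List[str]:
    """Rank pairs by config["sort_key"] (highest first) and keep config["number_assets"]."""
    key = config.get("sort_key", "quoteVolume")
    ranked = sorted(
        (pair for pair, ticker in tickers.items() if ticker.get(key) is not None),
        key=lambda pair: tickers[pair][key],
        reverse=True,
    )
    return ranked[: config["number_assets"]]
```

Pairs whose ticker lacks the sort key are skipped rather than ranked as zero, so missing data never pushes a pair into the list.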

@@ -134,7 +173,7 @@ This is called with each iteration of the bot (only if the Pairlist Handler is a

 It must return the resulting pairlist (which may then be passed into the chain of Pairlist Handlers).

-Validations are optional, the parent class exposes a `_verify_blacklist(pairlist)` and `_whitelist_for_active_markets(pairlist)` to do default filtering. Use this if you limit your result to a certain number of pairs - so the endresult is not shorter than expected.
+Validations are optional, the parent class exposes a `_verify_blacklist(pairlist)` and `_whitelist_for_active_markets(pairlist)` to do default filtering. Use this if you limit your result to a certain number of pairs - so the end-result is not shorter than expected.

 #### filter_pairlist

@@ -142,13 +181,13 @@ This method is called for each Pairlist Handler in the chain by the pairlist man

 This is called with each iteration of the bot - so consider implementing caching for compute/network heavy calculations.

-It get's passed a pairlist (which can be the result of previous pairlists) as well as `tickers`, a pre-fetched version of `get_tickers()`.
+It gets passed a pairlist (which can be the result of previous pairlists) as well as `tickers`, a pre-fetched version of `get_tickers()`.

 The default implementation in the base class simply calls the `_validate_pair()` method for each pair in the pairlist, but you may override it. So you should either implement the `_validate_pair()` in your Pairlist Handler or override `filter_pairlist()` to do something else.

 If overridden, it must return the resulting pairlist (which may then be passed into the next Pairlist Handler in the chain).

-Validations are optional, the parent class exposes a `_verify_blacklist(pairlist)` and `_whitelist_for_active_markets(pairlist)` to do default filters. Use this if you limit your result to a certain number of pairs - so the endresult is not shorter than expected.
+Validations are optional, the parent class exposes a `_verify_blacklist(pairlist)` and `_whitelist_for_active_markets(pairlist)` to do default filters. Use this if you limit your result to a certain number of pairs - so the end result is not shorter than expected.

 In `VolumePairList`, this implements different methods of sorting, does early validation so only the expected number of pairs is returned.

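The `filter_pairlist` text describes a default that calls `_validate_pair()` for each pair, with each handler's result passed to the next handler in the chain. A simplified, self-contained sketch of that contract: `BasePairListSketch`, `MinVolumeFilter` and `run_chain` are hypothetical names, the real base class receives more constructor arguments (exchange, pairlist manager, configurations) than shown, and the ticker layout is assumed.

```python
from typing import Dict, List


class BasePairListSketch:
    """Stand-in for a Pairlist Handler base class (hypothetical, heavily simplified)."""

    def _validate_pair(self, ticker: Dict) -> bool:
        # A handler either implements this or overrides filter_pairlist().
        raise NotImplementedError

    def filter_pairlist(self, pairlist: List[str], tickers: Dict[str, Dict]) -> List[str]:
        # Default behaviour described in the docs: keep each pair that validates.
        return [pair for pair in pairlist if self._validate_pair(tickers.get(pair, {}))]


class MinVolumeFilter(BasePairListSketch):
    """Example handler: keep pairs whose quoteVolume reaches a threshold."""

    def __init__(self, min_volume: float) -> None:
        self.min_volume = min_volume

    def _validate_pair(self, ticker: Dict) -> bool:
        return (ticker.get("quoteVolume") or 0.0) >= self.min_volume


def run_chain(handlers: List[BasePairListSketch], pairlist: List[str],
              tickers: Dict[str, Dict]) -> List[str]:
    """Feed the result of each handler into the next one."""
    for handler in handlers:
        pairlist = handler.filter_pairlist(pairlist, tickers)
    return pairlist
```

Because `tickers` is fetched once and shared by every handler, each handler only filters; none of them needs to call the exchange again.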

@@ -172,7 +211,7 @@ Most exchanges supported by CCXT should work out of the box.

 Check if the new exchange supports Stoploss on Exchange orders through their API.

-Since CCXT does not provide unification for Stoploss On Exchange yet, we'll need to implement the exchange-specific parameters ourselfs. Best look at `binance.py` for an example implementation of this. You'll need to dig through the documentation of the Exchange's API on how exactly this can be done. [CCXT Issues](https://github.com/ccxt/ccxt/issues) may also provide great help, since others may have implemented something similar for their projects.
+Since CCXT does not provide unification for Stoploss On Exchange yet, we'll need to implement the exchange-specific parameters ourselves. Best look at `binance.py` for an example implementation of this. You'll need to dig through the documentation of the Exchange's API on how exactly this can be done. [CCXT Issues](https://github.com/ccxt/ccxt/issues) may also provide great help, since others may have implemented something similar for their projects.

 ### Incomplete candles

@@ -245,6 +284,7 @@ git checkout -b new_release <commitid>

 Determine if crucial bugfixes have been made between this commit and the current state, and eventually cherry-pick these.

+* Merge the release branch (master) into this branch.
 * Edit `freqtrade/__init__.py` and add the version matching the current date (for example `2019.7` for July 2019). Minor versions can be `2019.7.1` should we need to do a second release that month. Version numbers must follow allowed versions from PEP0440 to avoid failures pushing to pypi.
 * Commit this part
 * push that branch to the remote and create a PR against the master branch
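The release step asks for a version derived from the current date (`2019.7` for July 2019, `2019.7.1` for a second release that month) that is acceptable under PEP 440. A sketch that builds such a string and checks it against a simplified pattern; the regex only covers the plain dot-separated release segment, not the full PEP 440 grammar (epochs, pre-, post- and dev-releases), and both helpers are hypothetical.

```python
import re
from datetime import date

# Only the plain release segment ("2019.7", "2019.7.1"); epochs and
# pre-, post- and dev-releases are deliberately outside this simplified check.
_RELEASE_SEGMENT = re.compile(r"\d+(\.\d+)*")


def release_version(day: date, patch: int = 0) -> str:
    """Return "<year>.<month>", or "<year>.<month>.<patch>" when patch > 0."""
    version = f"{day.year}.{day.month}"
    return f"{version}.{patch}" if patch > 0 else version


def is_plain_release(version: str) -> bool:
    """True if version consists only of dot-separated integers."""
    return _RELEASE_SEGMENT.fullmatch(version) is not None
```

For a complete PEP 440 check, a dedicated version parser is the safer choice than extending this regex.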

@@ -252,14 +292,14 @@ Determine if crucial bugfixes have been made between this commit and the current
 ### Create changelog from git commits

 !!! Note
-    Make sure that the master branch is uptodate!
+    Make sure that the master branch is up-to-date!

 ``` bash
 # Needs to be done before merging / pulling that branch.
 git log --oneline --no-decorate --no-merges master..new_release
 ```

-To keep the release-log short, best wrap the full git changelog into a collapsible details secction.
+To keep the release-log short, best wrap the full git changelog into a collapsible details section.

 ```markdown
 <details>
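The changelog step wraps the full `git log --oneline` output in a collapsible `<details>` section. A sketch that turns those one-line entries into such a block; the `<summary>` text and the `* ` bullet style are assumptions, since the snippet in this hunk stops at `<details>`. `wrap_changelog` is a hypothetical helper.

```python
from typing import Iterable


def wrap_changelog(log_lines: Iterable[str], summary: str = "Expand full changelog") -> str:
    """Wrap `git log --oneline` output in a collapsible <details> block.

    The <summary> text and the "* " bullet style are assumptions.
    """
    entries = [f"* {line.strip()}" for line in log_lines if line.strip()]
    # The blank line after <summary> lets GitHub render the bullets as markdown.
    parts = ["<details>", f"<summary>{summary}</summary>", "", *entries, "", "</details>"]
    return "\n".join(parts)
```

The input could come from the `git log` command above, for example `subprocess.run(["git", "log", "--oneline", "--no-decorate", "--no-merges", "master..new_release"], capture_output=True, text=True, check=True).stdout.splitlines()`.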

@@ -283,6 +323,9 @@ Once the PR against master is merged (best right after merging):

 ### pypi

+!!! Note
+    This process is now automated as part of Github Actions.
+
 To create a pypi release, please run the following commands:

 Additional requirement: `wheel`, `twine` (for uploading), account on pypi with proper permissions.

206

docs/docker.md

@@ -1,145 +1,7 @@
-# Using Freqtrade with Docker
-
-## Install Docker
-
-Start by downloading and installing Docker CE for your platform:
-
-* [Mac](https://docs.docker.com/docker-for-mac/install/)
-* [Windows](https://docs.docker.com/docker-for-windows/install/)
-* [Linux](https://docs.docker.com/install/)
-
-Optionally, [docker-compose](https://docs.docker.com/compose/install/) should be installed and available to follow the [docker quick start guide](#docker-quick-start).
-
-Once you have Docker installed, simply prepare the config file (e.g. `config.json`) and run the image for `freqtrade` as explained below.
-
-## Freqtrade with docker-compose
-
-Freqtrade provides an official Docker image on [Dockerhub](https://hub.docker.com/r/freqtradeorg/freqtrade/), as well as a [docker-compose file](https://github.com/freqtrade/freqtrade/blob/develop/docker-compose.yml) ready for usage.
-
-!!! Note
-    The following section assumes that docker and docker-compose is installed and available to the logged in user.
-
-!!! Note
-    All below comands use relative directories and will have to be executed from the directory containing the `docker-compose.yml` file.
-
-!!! Note "Docker on Raspberry"
-    If you're running freqtrade on a Raspberry PI, you must change the image from `freqtradeorg/freqtrade:master` to `freqtradeorg/freqtrade:master_pi` or `freqtradeorg/freqtrade:develop_pi`, otherwise the image will not work.
-
-### Docker quick start
-
-Create a new directory and place the [docker-compose file](https://github.com/freqtrade/freqtrade/blob/develop/docker-compose.yml) in this directory.
-
-``` bash
-mkdir ft_userdata
-cd ft_userdata/
-# Download the docker-compose file from the repository
-curl https://raw.githubusercontent.com/freqtrade/freqtrade/develop/docker-compose.yml -o docker-compose.yml
-
-# Pull the freqtrade image
-docker-compose pull
-
-# Create user directory structure
-docker-compose run --rm freqtrade create-userdir --userdir user_data
-
-# Create configuration - Requires answering interactive questions
-docker-compose run --rm freqtrade new-config --config user_data/config.json
-```
-
-The above snippet creates a new directory called "ft_userdata", downloads the latest compose file and pulls the freqtrade image.
-The last 2 steps in the snippet create the directory with user-data, as well as (interactively) the default configuration based on your selections.
-
-!!! Note
-    You can edit the configuration at any time, which is available as `user_data/config.json` (within the directory `ft_userdata`) when using the above configuration.
-
-#### Adding your strategy
-
-The configuration is now available as `user_data/config.json`.
-You should now copy your strategy to `user_data/strategies/` - and add the Strategy class name to the `docker-compose.yml` file, replacing `SampleStrategy`. If you wish to run the bot with the SampleStrategy, just leave it as it is.
-
-!!! Warning
-    The `SampleStrategy` is there for your reference and give you ideas for your own strategy.
-    Please always backtest the strategy and use dry-run for some time before risking real money!
-
-Once this is done, you're ready to launch the bot in trading mode (Dry-run or Live-trading, depending on your answer to the corresponding question you made above).
-
-``` bash
-docker-compose up -d
-```
-
-#### Docker-compose logs
-
-Logs will be written to `user_data/logs/freqtrade.log`.
-Alternatively, you can check the latest logs using `docker-compose logs -f`.
-
-#### Database
-
-The database will be in the user_data directory as well, and will be called `user_data/tradesv3.sqlite`.
-
-#### Updating freqtrade with docker-compose
-
-To update freqtrade when using docker-compose is as simple as running the following 2 commands:
-
-``` bash
-# Download the latest image
-docker-compose pull
-# Restart the image
-docker-compose up -d
-```
-
-This will first pull the latest image, and will then restart the container with the just pulled version.
-
-!!! Note
-    You should always check the changelog for breaking changes / manual interventions required and make sure the bot starts correctly after the update.
-
-#### Going from here
-
-Advanced users may edit the docker-compose file further to include all possible options or arguments.
-